
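Summary: This article is an introduction to Hadoop 2.x fully distributed clusters in the field of cloud computing. It briefly outlines Hadoop's basic concepts and its role in cloud computing, and focuses on building a Hadoop 2.x fully distributed cluster environment, including hardware setup, software installation, and configuration. For beginners, it offers the guidance and advice needed to get started quickly with building and managing a distributed Hadoop cluster.

Building a Hadoop Cluster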

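- Tools, software, and packages used

- Network: IP and DNS

- Java: installation and configuration

- Preparing for passwordless login

- Private and public keys

- Cloning, renaming, and changing IPs

- Passwordless SSH login

- Generating keys

- Sending keys

- Login test

- Installing and configuring Hadoop

- 0. Enter the master node's configuration directory etc/hadoop/

- 1. Core configuration: core-site.xml

- 2. HDFS configuration: hadoop-env.sh

- 3. HDFS configuration: hdfs-site.xml

- 4. YARN configuration: yarn-env.sh

- 5. YARN configuration: yarn-site.xml

- 6. MapReduce configuration: mapred-env.sh

- 7. MapReduce configuration: mapred-site.xml

- Distributing configuration files across the cluster

- I. Distribution script xsync

- II. Distributing the cluster configuration

- III. Checking the distributed files

- Cluster: starting nodes one by one

- hadoop01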

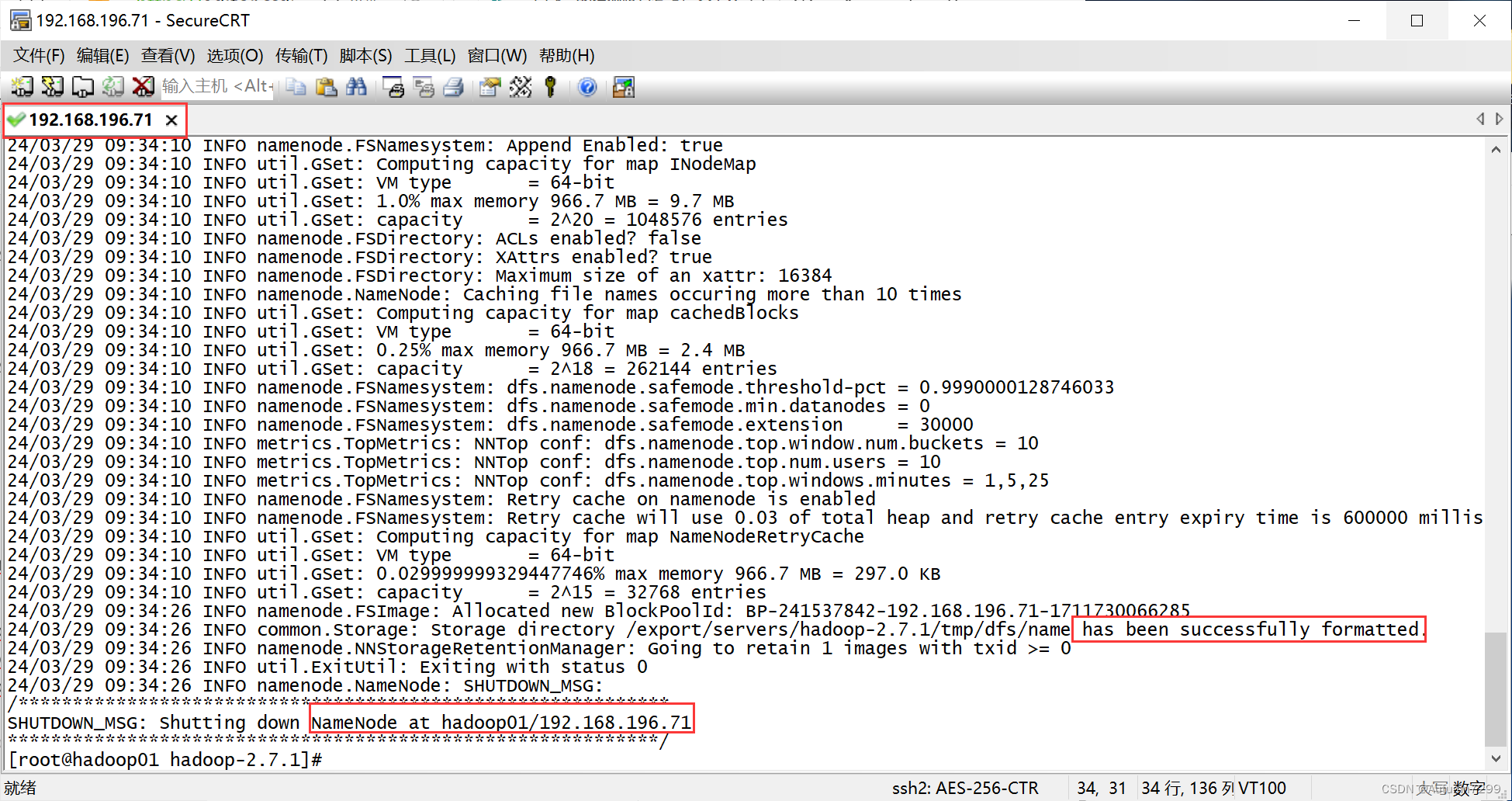

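- Formatting

- Problem found!!

- Starting NameNode and DataNode

- hadoop02

- Starting DataNode

- hadoop03

- Starting DataNode

- Web test with individually started daemons

- Starting and configuring the whole cluster

- Configuring slaves

- Syncing with the xsync script

- Stopping individual daemons and checking with jps

- Starting HDFS on hadoop01 (1st attempt, failed)

- Problem found!!!

- Copying and syncing with scp

- Starting HDFS on hadoop01 (2nd attempt, OK)

- Checking each node's processes with jps

- Starting YARN on hadoop02 (1st attempt, failed)

- Problem found!!!

- Starting YARN on hadoop02 (2nd attempt, OK: full HDFS + YARN startup)

- Web test

- HDFS: NameNode, DataNode

- YARN: ResourceManager

- Pitfalls you may run into (summary)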

Tools, software, and packages used

[Virtual machine] VMware Workstation 16 Pro

[OS image] CentOS-7-x86_64-DVD-1804.iso

[Java] jdk-8u281-linux-x64.rpm

[Hadoop] hadoop-2.7.1.tar.gz

[SSH client] SecureCRTPortable.exe

[Upload/download] SecureFXPortable.exe

Network: IP and DNS

Configure the network interface ens33 with a static IP:

```
[root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
UUID="fc9e207a-c97c-4d14-b36b-969ba295ffa9"
DEVICE="ens33"
ONBOOT="yes"
IPADDR=192.168.196.71
GATEWAY=192.168.196.2
DNS1=114.114.114.114
DNS2=8.8.8.8
```

Restart the network and check connectivity:

```
[root@localhost ~]# systemctl restart network
[root@localhost ~]# ping www.baidu.com
```

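Java: installation and configuration

Install from the rpm:

```
[root@localhost Desktop]# ls
jdk-8u281-linux-x64.rpm
[root@localhost Desktop]# rpm -ivh jdk-8u281-linux-x64.rpm
warning: jdk-8u281-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY
Preparing...                          ################################# [100%]
Updating / installing...
   1:jdk1.8-2000:1.8.0_281-fcs        ################################# [100%]
Unpacking JAR files...
        tools.jar...
        plugin.jar...
        javaws.jar...
        deploy.jar...
        rt.jar...
        jsse.jar...
        charsets.jar...
        localedata.jar...
```

After the rpm installation, the JDK is in /usr/java/jdk1.8.0_281-amd64/ by default.

```
[root@localhost /]# vi /etc/profile
```

Append:

```
export JAVA_HOME=/usr/java/jdk1.8.0_281-amd64
export CLASSPATH=$JAVA_HOME/lib:$CLASSPATH
export PATH=$JAVA_HOME/bin:$PATH
```

Reload:

```
[root@localhost /]# source /etc/profile
```

Check the Java version:

```
[root@localhost /]# java -version
openjdk version "1.8.0_161"
OpenJDK Runtime Environment (build 1.8.0_161-b14)
OpenJDK 64-Bit Server VM (build 25.161-b14, mixed mode)
```

This output shows CentOS's preinstalled OpenJDK 1.8.0_161 rather than the newly installed JDK 1.8.0_281, meaning the new JDK's bin directory was not ahead of it on PATH when this was run; that is most likely also why `jps` is not found later. With `export PATH=$JAVA_HOME/bin:$PATH` in place, `java -version` should report 1.8.0_281.

Preparing for passwordless login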

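Private and public keys

Private key: kept on the local machine. Public key: sent to the other machines. Both live in a hidden directory under root's home directory:

```
[root@localhost ~]# cd .ssh/
[root@localhost .ssh]# ls
id_rsa  id_rsa.pub
[root@localhost .ssh]# pwd
/root/.ssh
```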

Cloning, renaming, and changing IPs

Clone three machines:

- hadoop01: 192.168.196.71
- hadoop02: 192.168.196.72
- hadoop03: 192.168.196.73

Do the following on all three machines: 👇

Change the IP (the IPADDR line):

```
[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33
```

Rename (each machine runs its own line):

```
[root@localhost ~]# hostnamectl set-hostname hadoop01
[root@localhost ~]# hostnamectl set-hostname hadoop02
[root@localhost ~]# hostnamectl set-hostname hadoop03
```

Reboot:

```
[root@localhost ~]# reboot
```
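Each clone starts out with hadoop01's ifcfg-ens33, so on hadoop02 and hadoop03 only the IPADDR line actually needs to change. A minimal sketch of making that edit without opening vi, assuming GNU sed (as on CentOS 7); `set_static_ip` is a hypothetical helper name, not a system command:

```bash
#!/bin/bash
# Hypothetical helper: rewrite the IPADDR= line of an ifcfg file in place.
# Assumes GNU sed (-i edits the file in place).
set_static_ip() {
    local file=$1 new_ip=$2
    if [ ! -f "$file" ]; then
        echo "no such file: $file" >&2
        return 1
    fi
    if grep -q '^IPADDR=' "$file"; then
        # Replace the whole existing line, quoted or not
        sed -i "s/^IPADDR=.*/IPADDR=$new_ip/" "$file"
    else
        # No IPADDR line yet: append one
        echo "IPADDR=$new_ip" >> "$file"
    fi
}
```

For example, on hadoop02: `set_static_ip /etc/sysconfig/network-scripts/ifcfg-ens33 192.168.196.72`, then `hostnamectl set-hostname hadoop02` and reboot. Trying it on a copy of the file first is a cheap way to check the result.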

Passwordless SSH login

Generating keys

```
[root@hadoop01 ~]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:pW1bexRKYdsv8tNjg5DBk7iYoTaOiX/9TksBqN5YIlo root@hadoop01
The key's randomart image is:
(randomart omitted)
[root@hadoop01 ~]# ssh-copy-id localhost
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'localhost (::1)' can't be established.
ECDSA key fingerprint is SHA256:sd4vCsEraYT7vqvsW4egMtl9ctOCt9SkHQQWe0jK6BM.
ECDSA key fingerprint is MD5:04:f4:c4:4b:8a:ae:a5:cf:de:52:16:40:db:b4:17:fc.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@localhost's password:
Permission denied, please try again.
root@localhost's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'localhost'"
and check to make sure that only the key(s) you wanted were added.
```

Sending keys

```
[root@hadoop01 ~]# ssh-copy-id 192.168.196.72
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host '192.168.196.72 (192.168.196.72)' can't be established.
ECDSA key fingerprint is SHA256:sd4vCsEraYT7vqvsW4egMtl9ctOCt9SkHQQWe0jK6BM.
ECDSA key fingerprint is MD5:04:f4:c4:4b:8a:ae:a5:cf:de:52:16:40:db:b4:17:fc.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@192.168.196.72's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.196.72'"
and check to make sure that only the key(s) you wanted were added.

[root@hadoop01 ~]# ssh-copy-id 192.168.196.73
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host '192.168.196.73 (192.168.196.73)' can't be established.
ECDSA key fingerprint is SHA256:sd4vCsEraYT7vqvsW4egMtl9ctOCt9SkHQQWe0jK6BM.
ECDSA key fingerprint is MD5:04:f4:c4:4b:8a:ae:a5:cf:de:52:16:40:db:b4:17:fc.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@192.168.196.73's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.196.73'"
and check to make sure that only the key(s) you wanted were added.
```

Login test

```
[root@hadoop01 ~]# ssh 192.168.196.72
Last login: Wed Mar 27 11:53:21 2024 from 192.168.196.1
[root@hadoop02 ~]#
```

hadoop01 can now ssh into hadoop02 without a password 👆. Going back from hadoop02 to hadoop01, however, still asks for a password 👇, because hadoop02 has not yet sent its own public key to hadoop01:

```
[root@hadoop02 ~]# ssh 192.168.196.71
The authenticity of host '192.168.196.71 (192.168.196.71)' can't be established.
ECDSA key fingerprint is SHA256:sd4vCsEraYT7vqvsW4egMtl9ctOCt9SkHQQWe0jK6BM.
ECDSA key fingerprint is MD5:04:f4:c4:4b:8a:ae:a5:cf:de:52:16:40:db:b4:17:fc.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.196.71' (ECDSA) to the list of known hosts.
root@192.168.196.71's password:
Last failed login: Wed Mar 27 11:57:40 PDT 2024 from localhost on ssh:notty
There was 1 failed login attempt since the last successful login.
Last login: Wed Mar 27 11:52:21 2024 from 192.168.196.1
[root@hadoop01 ~]#
```

On hadoop02 and hadoop03, generate a key pair the same way, send the public key to the other two machines, and test passwordless login (omitted here). Each node should also authorize its own key, because start-yarn.sh on hadoop02 connects to hadoop02 itself as well.
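Because every node repeats the same ssh-copy-id calls, the step can be scripted. A minimal sketch, assuming root logins and the three IPs used in this article; `distribute_key` and the `DRY_RUN` variable are hypothetical names. The node itself is included, since start-dfs.sh and start-yarn.sh also ssh into the local node (see the host-key prompts in the first start-dfs.sh run later):

```bash
#!/bin/bash
# The three nodes used in this article
HOSTS="192.168.196.71 192.168.196.72 192.168.196.73"

# Hypothetical helper: copy this node's public key to every node, itself
# included. Only prints the commands unless DRY_RUN=0 is set.
distribute_key() {
    local host
    for host in $HOSTS; do
        if [ "${DRY_RUN:-1}" = 0 ]; then
            ssh-copy-id "root@$host"
        else
            echo "ssh-copy-id root@$host"
        fi
    done
}
```

Run `distribute_key` as is to preview the commands, then `DRY_RUN=0 distribute_key` on each node to actually copy the key (ssh-copy-id still asks for each password once).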

Installing and configuring Hadoop

Create the directory that will hold Hadoop:

```
[root@hadoop01 ~]# mkdir -p /export/servers
```

Go to the directory containing the tarball:

```
[root@hadoop01 ~]# cd /home/cps/Desktop/
[root@hadoop01 Desktop]# ls
hadoop-2.7.1.tar.gz
```

Extract it:

```
[root@hadoop01 Desktop]# tar -zxvf hadoop-2.7.1.tar.gz -C /export/servers/
```

Confirm where it was extracted:

```
[root@hadoop01 hadoop-2.7.1]# pwd
/export/servers/hadoop-2.7.1
```

Configure the environment:

```
[root@hadoop01 hadoop-2.7.1]# vi /etc/profile
```

Append:

```
export HADOOP_HOME=/export/servers/hadoop-2.7.1
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```

Reload:

```
[root@hadoop01 hadoop-2.7.1]# source /etc/profile
```

Check the Hadoop version:

```
[root@hadoop01 hadoop-2.7.1]# hadoop version
Hadoop 2.7.1
Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a
Compiled by jenkins on 2015-06-29T06:04Z
Compiled with protoc 2.5.0
From source with checksum fc0a1a23fc1868e4d5ee7fa2b28a58a
This command was run using /export/servers/hadoop-2.7.1/share/hadoop/common/hadoop-common-2.7.1.jar
[root@hadoop01 hadoop-2.7.1]#
```

Install and configure the other two machines the same way (omitted here).
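Both /etc/profile edits (JAVA_HOME earlier, HADOOP_HOME here) simply append lines, so repeating a step leaves duplicates behind. A sketch of an idempotent version, assuming the paths used in this article; `append_once` and `add_cluster_env` are hypothetical helper names:

```bash
#!/bin/bash
# Hypothetical helper: append a line to a file only if that exact line is not
# already there, so re-running the setup does not create duplicates.
append_once() {
    local file=$1 line=$2
    touch "$file"
    # If the file does not end with a newline, add one so lines don't merge
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        echo >> "$file"
    fi
    # -x: whole-line match, -F: fixed string (no regex), --: end of options
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# The environment lines used in this article (single quotes keep $VARS literal)
add_cluster_env() {
    local profile=${1:-/etc/profile}
    append_once "$profile" 'export JAVA_HOME=/usr/java/jdk1.8.0_281-amd64'
    append_once "$profile" 'export PATH=$JAVA_HOME/bin:$PATH'
    append_once "$profile" 'export HADOOP_HOME=/export/servers/hadoop-2.7.1'
    append_once "$profile" 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
}
```

Run `add_cluster_env` (or `add_cluster_env /some/scratch/file` to try it out first), then `source /etc/profile`. Because the variables stay unexpanded in the file, they are resolved each time the profile is sourced.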

0. Enter the master node's configuration directory etc/hadoop/

```
[root@hadoop01 ~]# cd /export/servers/hadoop-2.7.1/etc/hadoop/
[root@hadoop01 hadoop]# ll
total 156
-rw-r--r--. 1 10021 10021 4436 Jun 28 2015 capacity-scheduler.xml
-rw-r--r--. 1 10021 10021 1335 Jun 28 2015 configuration.xsl
-rw-r--r--. 1 10021 10021 318 Jun 28 2015 container-executor.cfg
-rw-r--r--. 1 10021 10021 1123 Mar 29 01:12 core-site.xml
-rw-r--r--. 1 10021 10021 3670 Jun 28 2015 hadoop-env.cmd
-rw-r--r--. 1 10021 10021 4240 Mar 29 01:20 hadoop-env.sh
-rw-r--r--. 1 10021 10021 2598 Jun 28 2015 hadoop-metrics2.properties
-rw-r--r--. 1 10021 10021 2490 Jun 28 2015 hadoop-metrics.properties
-rw-r--r--. 1 10021 10021 9683 Jun 28 2015 hadoop-policy.xml
-rw-r--r--. 1 10021 10021 1132 Mar 29 01:21 hdfs-site.xml
-rw-r--r--. 1 10021 10021 1449 Jun 28 2015 httpfs-env.sh
-rw-r--r--. 1 10021 10021 1657 Jun 28 2015 httpfs-log4j.properties
-rw-r--r--. 1 10021 10021 21 Jun 28 2015 httpfs-signature.secret
-rw-r--r--. 1 10021 10021 620 Jun 28 2015 httpfs-site.xml
-rw-r--r--. 1 10021 10021 3518 Jun 28 2015 kms-acls.xml
-rw-r--r--. 1 10021 10021 1527 Jun 28 2015 kms-env.sh
-rw-r--r--. 1 10021 10021 1631 Jun 28 2015 kms-log4j.properties
-rw-r--r--. 1 10021 10021 5511 Jun 28 2015 kms-site.xml
-rw-r--r--. 1 10021 10021 11237 Jun 28 2015 log4j.properties
-rw-r--r--. 1 10021 10021 951 Jun 28 2015 mapred-env.cmd
-rw-r--r--. 1 10021 10021 1431 Mar 29 01:28 mapred-env.sh
-rw-r--r--. 1 10021 10021 4113 Jun 28 2015 mapred-queues.xml.template
-rw-r--r--. 1 root root 950 Mar 29 01:29 mapred-site.xml
-rw-r--r--. 1 10021 10021 758 Jun 28 2015 mapred-site.xml.template
-rw-r--r--. 1 10021 10021 10 Jun 28 2015 slaves
-rw-r--r--. 1 10021 10021 2316 Jun 28 2015 ssl-client.xml.example
-rw-r--r--. 1 10021 10021 2268 Jun 28 2015 ssl-server.xml.example
-rw-r--r--. 1 10021 10021 2250 Jun 28 2015 yarn-env.cmd
-rw-r--r--. 1 10021 10021 4585 Mar 29 01:23 yarn-env.sh
-rw-r--r--. 1 10021 10021 933 Mar 29 01:25 yarn-site.xml
```

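1. Core configuration: core-site.xml

```
[root@hadoop01 hadoop]# vi core-site.xml
```

```xml
<configuration>
    <!-- Address of the HDFS NameNode (hadoop01) -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.196.71:9000</value>
    </property>
    <!-- Base directory for files Hadoop generates at runtime -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/export/servers/hadoop-2.7.1/tmp</value>
    </property>
</configuration>
```

2. HDFS configuration: hadoop-env.sh

```
[root@hadoop01 hadoop]# vi hadoop-env.sh
```

Set JAVA_HOME:

```
# The java implementation to use.
export JAVA_HOME=/usr/java/jdk1.8.0_281-amd64
```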
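core-site.xml above, and the hdfs-site.xml, yarn-site.xml and mapred-site.xml files configured in this section, all share the same `<configuration>`/`<property>` layout. A minimal sketch of generating such a file from `name=value` pairs; `write_site_xml` is a hypothetical helper, and it does not escape XML special characters (`&`, `<`), which none of the values in this article need:

```bash
#!/bin/bash
# Hypothetical helper: write a Hadoop *-site.xml from name=value pairs.
# Usage: write_site_xml FILE name1=value1 [name2=value2 ...]
# Overwrites FILE; values are written verbatim (no XML escaping).
write_site_xml() {
    local file=$1 pair name value
    shift
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
        echo '<configuration>'
        for pair in "$@"; do
            name=${pair%%=*}     # text before the first '='
            value=${pair#*=}     # text after the first '='
            echo '    <property>'
            echo "        <name>$name</name>"
            echo "        <value>$value</value>"
            echo '    </property>'
        done
        echo '</configuration>'
    } > "$file"
}
```

For example, step 1 becomes `write_site_xml core-site.xml fs.defaultFS=hdfs://192.168.196.71:9000 hadoop.tmp.dir=/export/servers/hadoop-2.7.1/tmp`, run inside etc/hadoop/.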

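3. HDFS configuration: hdfs-site.xml

```
[root@hadoop01 hadoop]# vi hdfs-site.xml
```

```xml
<configuration>
    <!-- Number of replicas kept for each block -->
    <property>
        <name>dfs.replication</name>
        <value>3</value>
    </property>
    <!-- HTTP address of the SecondaryNameNode (hadoop03) -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>192.168.196.73:50090</value>
    </property>
</configuration>
```

4. YARN configuration: yarn-env.sh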

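Set JAVA_HOME to the same JDK as in hadoop-env.sh:

```
export JAVA_HOME=/usr/java/jdk1.8.0_281-amd64
```

5. YARN configuration: yarn-site.xml

```
[root@hadoop01 hadoop]# vi yarn-site.xml
```

```xml
<configuration>
    <!-- Host running the ResourceManager (hadoop02) -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>192.168.196.72</value>
    </property>
    <!-- Auxiliary service the NodeManager runs for the MapReduce shuffle -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>
```

6. MapReduce configuration: mapred-env.sh

Set JAVA_HOME here as well:

```
export JAVA_HOME=/usr/java/jdk1.8.0_281-amd64
```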
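hadoop-env.sh, yarn-env.sh and mapred-env.sh all need the same JAVA_HOME line. A sketch that sets it in place with GNU sed, assuming each file has either an existing `export JAVA_HOME=` line (possibly commented out) or none at all; `set_java_home` is a hypothetical helper:

```bash
#!/bin/bash
# Hypothetical helper: make an *-env.sh file export the given JAVA_HOME.
# An existing "export JAVA_HOME=..." line, commented out or not, is replaced;
# otherwise the line is appended. Assumes GNU sed (-i, -E).
set_java_home() {
    local file=$1 java_home=$2
    local pattern='^[[:space:]]*#?[[:space:]]*export JAVA_HOME='
    if grep -qE "$pattern" "$file"; then
        # '|' as the s/// delimiter because the path contains '/'
        sed -i -E "s|$pattern.*|export JAVA_HOME=$java_home|" "$file"
    else
        echo "export JAVA_HOME=$java_home" >> "$file"
    fi
}
```

Applied to all three files from etc/hadoop/: `for f in hadoop-env.sh yarn-env.sh mapred-env.sh; do set_java_home "$f" /usr/java/jdk1.8.0_281-amd64; done`.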

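7. MapReduce configuration: mapred-site.xml

Copy the template, then edit it:

```
[root@hadoop01 hadoop]# cp mapred-site.xml.template mapred-site.xml
[root@hadoop01 hadoop]# vi mapred-site.xml
```

```xml
<configuration>
    <!-- Run MapReduce jobs on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```

Distributing configuration files across the cluster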

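I. Distribution script xsync

```
[root@hadoop01 bin]# pwd
/bin
[root@hadoop01 bin]# vi xsync
```

On CentOS 7, /bin is a symlink to /usr/bin, which is why the script later shows up as /usr/bin/xsync.

```bash
#!/bin/bash
# Get the number of arguments; exit if there are none
pcount=$#
if ((pcount == 0)); then
    echo no args
    exit 1
fi

# Get the file name
p1=$1
fname=$(basename "$p1")
echo fname=$fname

# Resolve the parent directory to an absolute path (-P: physical path, symlinks resolved)
pdir=$(cd -P "$(dirname "$p1")"; pwd)
echo pdir=$pdir

# Get the current user name
user=$(whoami)

# Loop over the other nodes: 192.168.196.72 (hadoop02) and 192.168.196.73 (hadoop03)
for ((host = 72; host <= 73; host++)); do
    echo "====== rsync -rvl $pdir/$fname $user@192.168.196.$host:$pdir ======"
    rsync -rvl "$pdir/$fname" "$user@192.168.196.$host:$pdir"
done
```

Cluster: starting nodes one by one

hadoop01

Formatting

Format the NameNode on hadoop01 with `hdfs namenode -format`. The end of the output:

```
...
24/03/29 09:34:26 INFO util.ExitUtil: Exiting with status 0
24/03/29 09:34:26 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop01/192.168.196.71
************************************************************/
```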

Problem found!!

```
[root@hadoop01 hadoop-2.7.1]# jps
bash: jps: command not found...
```

Fix: create a jps symlink in /usr/bin

```
[root@hadoop01 bin]# pwd
/usr/bin
[root@hadoop01 bin]# ln -s -f /usr/java/jdk1.8.0_281-amd64/bin/jps jps
[root@hadoop01 bin]# jps
46708 Jps
```
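The symlink fixes jps only. `jps: command not found`, together with the OpenJDK version that `java -version` printed earlier, suggests that `$JAVA_HOME/bin` is not on PATH at all. A minimal sketch of checking that directly; `check_java_path` is a hypothetical helper, and it compares directories with `-ef` so that forms such as `/usr/java/jdk1.8.0_281-amd64//bin` still count:

```bash
#!/bin/bash
# Hypothetical helper: succeed if some PATH entry is the same directory as
# "$JAVA_HOME/bin" (compared with -ef, so "//" or a trailing "/" don't matter).
check_java_path() {
    local java_home=${1:-${JAVA_HOME:-}} entry
    local IFS=:                              # split PATH on ':' below
    for entry in $PATH; do
        if [ -n "$entry" ] && [ "$entry" -ef "$java_home/bin" ]; then
            echo "ok: $entry"
            return 0
        fi
    done
    echo "missing: $java_home/bin is not on PATH" >&2
    return 1
}
```

After `source /etc/profile`, run `check_java_path /usr/java/jdk1.8.0_281-amd64`. If it reports the directory missing, fixing the PATH line in /etc/profile makes both `java` and `jps` resolve to the installed JDK without per-tool symlinks.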

Starting NameNode and DataNode

```
[root@hadoop01 bin]# cd /export/servers/hadoop-2.7.1
[root@hadoop01 hadoop-2.7.1]# jps
46629 Jps
[root@hadoop01 hadoop-2.7.1]# sbin/hadoop-daemon.sh start namenode
starting namenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-namenode-hadoop01.out
[root@hadoop01 hadoop-2.7.1]# jps
49991 Jps
49944 NameNode
[root@hadoop01 hadoop-2.7.1]# sbin/hadoop-daemon.sh start datanode
starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop01.out
[root@hadoop01 hadoop-2.7.1]# jps
51479 DataNode
51527 Jps
49944 NameNode
```

hadoop02

Starting DataNode

```
Last login: Fri Mar 29 09:33:25 2024 from 192.168.196.1
[root@hadoop02 ~]# cd /export/servers/hadoop-2.7.1
[root@hadoop02 hadoop-2.7.1]# sbin/hadoop-daemon.sh start datanode
starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop02.out
[root@hadoop02 hadoop-2.7.1]# jps
bash: jps: command not found...
[root@hadoop02 hadoop-2.7.1]# cd /usr/bin/
[root@hadoop02 bin]# pwd
/usr/bin
[root@hadoop02 bin]# ln -s -f /usr/java/jdk1.8.0_281-amd64/bin/jps jps
[root@hadoop02 bin]# cd /export/servers/hadoop-2.7.1
[root@hadoop02 hadoop-2.7.1]# jps
34802 Jps
34505 DataNode
```

hadoop03

Starting DataNode

```
Last login: Fri Mar 29 16:43:14 2024 from 192.168.196.1
[root@hadoop03 ~]# cd /usr/bin/
[root@hadoop03 bin]# ln -s -f /usr/java/jdk1.8.0_281-amd64/bin/jps jps
[root@hadoop03 bin]# cd /export/servers/hadoop-2.7.1
[root@hadoop03 hadoop-2.7.1]# sbin/hadoop-daemon.sh start datanode
starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop03.out
[root@hadoop03 hadoop-2.7.1]# jps
41073 DataNode
41125 Jps
[root@hadoop03 hadoop-2.7.1]#
```

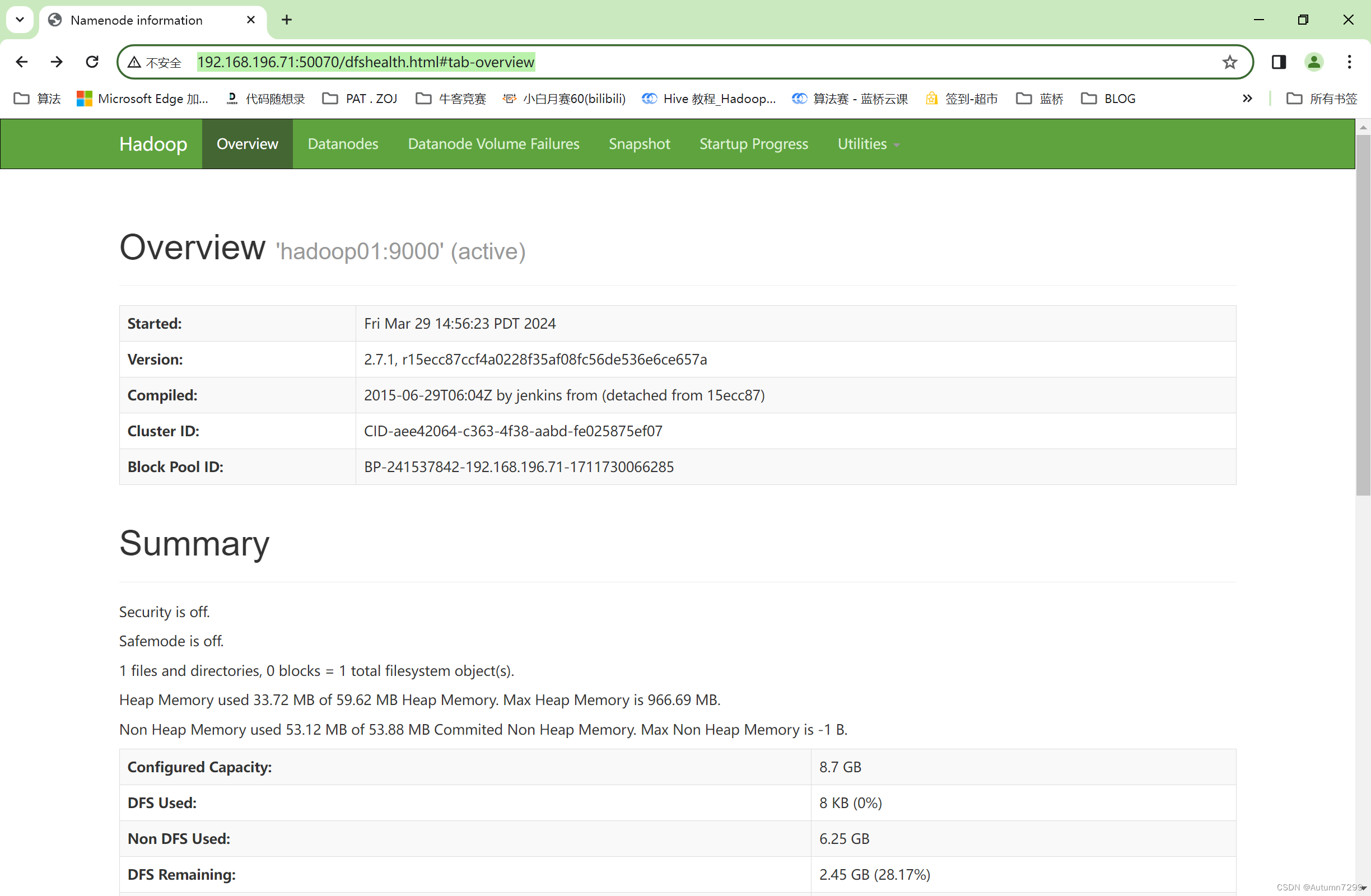

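Web test with individually started daemons

Turn off the firewall on all three machines. (`systemctl stop firewalld` only lasts until the next reboot; `systemctl disable firewalld` also keeps it off after a reboot.)

```
[root@hadoop01 ~]# systemctl stop firewalld
```

Open the NameNode web UI in a browser: http://192.168.196.71:50070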

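Starting and configuring the whole cluster

Configuring slaves

```
[root@hadoop01 bin]# cd /export/servers/hadoop-2.7.1
[root@hadoop01 hadoop-2.7.1]# cd etc/
[root@hadoop01 etc]# cd hadoop/
[root@hadoop01 hadoop]# vi slaves
[root@hadoop01 hadoop]# cat slaves
192.168.196.71
192.168.196.72
192.198.196.73
```

Note that the third entry should be 192.168.196.73: the typo 192.198.196.73 shown here is what causes the `Connection refused` error in the first start-dfs.sh run below.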
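A one-character slip such as 192.198 for 192.168 is easy to miss by eye. A sketch of checking slaves against the cluster's known addresses before distributing it; the `KNOWN_HOSTS` list holds this article's IPs and hostnames, and `check_slaves` is a hypothetical helper:

```bash
#!/bin/bash
# Addresses that may legitimately appear in slaves (this article's cluster)
KNOWN_HOSTS="192.168.196.71 192.168.196.72 192.168.196.73 hadoop01 hadoop02 hadoop03"

# Hypothetical helper: report every non-blank line of a slaves file that is
# not in KNOWN_HOSTS. Returns 1 if any line is unknown.
check_slaves() {
    local file=$1 line known n=0 bad=0 found
    # read without IFS= trims leading/trailing blanks; "|| [ -n ]" keeps a
    # last line that has no trailing newline
    while read -r line || [ -n "$line" ]; do
        n=$((n + 1))
        line=${line%$'\r'}                   # tolerate Windows line endings
        [ -z "$line" ] && continue           # skip blank lines
        found=0
        for known in $KNOWN_HOSTS; do
            [ "$line" = "$known" ] && found=1
        done
        if [ "$found" -eq 0 ]; then
            echo "line $n: unknown host '$line'" >&2
            bad=1
        fi
    done < "$file"
    return "$bad"
}
```

Run from etc/hadoop/, `check_slaves slaves && xsync slaves` refuses to distribute a file that contains an unknown entry.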

Syncing with the xsync script

```
[root@hadoop01 hadoop]# xsync slaves
fname=slaves
/usr/bin/xsync: line 15: ~cd: command not found
pdir=/export/servers/hadoop-2.7.1/etc/hadoop
====== rsync -rvl /export/servers/hadoop-2.7.1/etc/hadoop/slaves root@192.168.196.72:/export/servers/hadoop-2.7.1/etc/hadoop ======
sending incremental file list
slaves

sent 137 bytes received 41 bytes 27.38 bytes/sec
total size is 47 speedup is 0.26
====== rsync -rvl /export/servers/hadoop-2.7.1/etc/hadoop/slaves root@192.168.196.73:/export/servers/hadoop-2.7.1/etc/hadoop ======
sending incremental file list
slaves

sent 137 bytes received 41 bytes 32.36 bytes/sec
total size is 47 speedup is 0.26
```

The `~cd: command not found` message comes from the `~cd` typo in the script (it should be `cd`). The sync still worked here only because xsync was run from the file's own directory, so `pwd` happened to print the right path; run from anywhere else, the script would resolve the wrong directory.

Checking the distribution

```
[root@hadoop02 hadoop-2.7.1]# cd etc/
[root@hadoop02 etc]# cd hadoop/
[root@hadoop02 hadoop]# cat slaves
192.168.196.71
192.168.196.72
192.198.196.73
```

hadoop03 (omitted here)

Stopping individual daemons and checking with jps

```
[root@hadoop01 hadoop]# jps
51479 DataNode
49944 NameNode
90493 Jps
[root@hadoop01 hadoop]# cd ..
[root@hadoop01 etc]# cd ..
[root@hadoop01 hadoop-2.7.1]# sbin/hadoop-daemon.sh stop datanode
stopping datanode
[root@hadoop01 hadoop-2.7.1]# sbin/hadoop-daemon.sh stop namenode
stopping namenode
[root@hadoop01 hadoop-2.7.1]# jps
90981 Jps
```

```
[root@hadoop02 hadoop-2.7.1]# jps
34505 DataNode
73214 Jps
[root@hadoop02 hadoop-2.7.1]# sbin/hadoop-daemon.sh stop datanode
stopping datanode
[root@hadoop02 hadoop-2.7.1]# jps
73382 Jps
```

```
[root@hadoop03 hadoop]# cd ..
[root@hadoop03 etc]# cd ..
[root@hadoop03 hadoop-2.7.1]# jps
41073 DataNode
75572 Jps
[root@hadoop03 hadoop-2.7.1]# sbin/hadoop-daemon.sh stop datanode
stopping datanode
[root@hadoop03 hadoop-2.7.1]# jps
75990 Jps
```

Starting HDFS on hadoop01 (1st attempt, failed)

```
[root@hadoop01 hadoop-2.7.1]# sbin/start-dfs.sh
Starting namenodes on [hadoop01]
The authenticity of host 'hadoop01 (fe80::466a:550e:e194:cd87%ens33)' can't be established.
ECDSA key fingerprint is SHA256:sd4vCsEraYT7vqvsW4egMtl9ctOCt9SkHQQWe0jK6BM.
ECDSA key fingerprint is MD5:04:f4:c4:4b:8a:ae:a5:cf:de:52:16:40:db:b4:17:fc.
Are you sure you want to continue connecting (yes/no)? yes
hadoop01: Warning: Permanently added 'hadoop01,fe80::466a:550e:e194:cd87%ens33' (ECDSA) to the list of known hosts.
hadoop01: starting namenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-namenode-hadoop01.out
The authenticity of host '192.168.196.71 (192.168.196.71)' can't be established.
ECDSA key fingerprint is SHA256:sd4vCsEraYT7vqvsW4egMtl9ctOCt9SkHQQWe0jK6BM.
ECDSA key fingerprint is MD5:04:f4:c4:4b:8a:ae:a5:cf:de:52:16:40:db:b4:17:fc.
Are you sure you want to continue connecting (yes/no)? 192.168.196.72: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop02.out
192.198.196.73: ssh: connect to host 192.198.196.73 port 22: Connection refused
yes
192.168.196.71: Warning: Permanently added '192.168.196.71' (ECDSA) to the list of known hosts.
192.168.196.71: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop01.out
Starting secondary namenodes [192.168.196.73]
192.168.196.73: starting secondarynamenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-secondarynamenode-hadoop03.out
```

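Problem found!!!

- `hadoop01: starting namenode`: OK
- `192.168.196.72: starting datanode`: OK
- `192.198.196.73: ssh: connect to host 192.198.196.73 port 22: Connection refused`: failed
- `192.168.196.71: starting datanode`: OK
- `192.168.196.73: starting secondarynamenode`: OK

The failing address 192.198.196.73 is a typo for 192.168.196.73 in the slaves file, so no DataNode was started on hadoop03. Correct that entry in slaves on every node before starting again.

Stop what start-dfs.sh started (stop-dfs.sh), then run jps on each node to make sure DataNode, NameNode, and SecondaryNameNode are all shut down.

In /export/servers/hadoop-2.7.1, check whether logs and data directories exist (with this configuration, HDFS data lives under tmp/, per hadoop.tmp.dir) and delete them, e.g. rm -rf logs/. Check all three machines!!!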

From the master node, sync to the other two machines with scp.

Copying and syncing with scp

```
[root@hadoop01 ~]# scp /etc/profile 192.168.196.72:/etc/profile
profile 100% 2049 664.2KB/s 00:00
[root@hadoop01 ~]# scp /etc/profile 192.168.196.73:/etc/profile
profile 100% 2049 535.7KB/s 00:00
[root@hadoop01 ~]# scp -r /export/ 192.168.196.72:/
[root@hadoop01 ~]# scp -r /export/ 192.168.196.73:/
```
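Since both scp commands repeat for every worker, the step can be looped. A sketch assuming this article's two worker IPs; `sync_to_workers`, `run` and `DRY_RUN` are hypothetical names, and nothing is copied unless `DRY_RUN=0`. Keep in mind that `scp -r /export/` copies everything under /export, including hadoop01's tmp/ and logs/ directories if they exist, so check the workers for those afterwards:

```bash
#!/bin/bash
# Worker nodes in this article (hadoop02, hadoop03)
WORKERS="192.168.196.72 192.168.196.73"

# Hypothetical helper: run a command, or just print it unless DRY_RUN=0
run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "$*"
    fi
}

# Push /etc/profile and the whole /export tree to every worker
sync_to_workers() {
    local host
    for host in $WORKERS; do
        run scp /etc/profile "$host:/etc/profile"
        run scp -r /export/ "$host:/"
    done
}
```

`sync_to_workers` previews the four commands; `DRY_RUN=0 sync_to_workers` runs them.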

Starting HDFS on hadoop01 (2nd attempt, OK)

```
[root@hadoop01 etc]# start-dfs.sh
Starting namenodes on [hadoop01]
hadoop01: starting namenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-namenode-hadoop01.out
192.168.196.73: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop03.out
192.168.196.72: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop02.out
192.168.196.71: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop01.out
Starting secondary namenodes [192.168.196.73]
192.168.196.73: starting secondarynamenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-secondarynamenode-hadoop03.out
```

Checking each node's processes with jps

```
[root@hadoop01 hadoop-2.7.1]# jps
6033 Jps
5718 NameNode
5833 DataNode
```

```
[root@hadoop02 hadoop-2.7.1]# jps
5336 DataNode
5390 Jps
```

```
[root@hadoop03 hadoop-2.7.1]# jps
5472 SecondaryNameNode
5523 Jps
5387 DataNode
```

Starting YARN on hadoop02 (1st attempt, failed)

```
[root@hadoop02 hadoop-2.7.1]# start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-resourcemanager-hadoop02.out
192.168.196.71: starting nodemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-nodemanager-hadoop01.out
192.168.196.72: starting nodemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-nodemanager-hadoop02.out
192.168.196.73: starting nodemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-nodemanager-hadoop03.out
[root@hadoop02 hadoop-2.7.1]# jps
12035 NodeManager
11911 ResourceManager
12409 Jps
```

```
[root@hadoop03 hadoop-2.7.1]# jps
11890 Jps
11638 SecondaryNameNode
11852 NodeManager
```

```
[root@hadoop01 current]# jps
13206 NodeManager
12791 NameNode
13375 Jps
```

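Problem found!!!

NodeManager and DataNode seemed unable to run at the same time: after YARN started, no node showed a DataNode in jps any more.

Cause: `-format` generates a clusterID (cluster ID) each time it formats. If the data from an earlier format is not deleted, the clusterID from the new format conflicts with the old one still stored on the DataNodes, and those DataNodes shut down.

Solution:

Before formatting, delete what the previous `-format` produced. By default that is under /tmp (hadoop.tmp.dir defaults to /tmp/hadoop-${user.name}); if you set hadoop.tmp.dir, as core-site.xml does here, delete that directory instead. Here: rm -rf tmp/ logs/

```
[root@hadoop01 hadoop-2.7.1]# rm -rf tmp/ logs/
[root@hadoop01 hadoop-2.7.1]# ll
total 28
drwxr-xr-x. 2 10021 10021 194 Jun 28 2015 bin
drwxr-xr-x. 3 10021 10021 20 Jun 28 2015 etc
drwxr-xr-x. 2 10021 10021 106 Jun 28 2015 include
drwxr-xr-x. 3 10021 10021 20 Jun 28 2015 lib
drwxr-xr-x. 2 10021 10021 239 Jun 28 2015 libexec
-rw-r--r--. 1 10021 10021 15429 Jun 28 2015 LICENSE.txt
-rw-r--r--. 1 10021 10021 101 Jun 28 2015 NOTICE.txt
-rw-r--r--. 1 10021 10021 1366 Jun 28 2015 README.txt
drwxr-xr-x. 2 10021 10021 4096 Jun 28 2015 sbin
drwxr-xr-x. 4 10021 10021 31 Jun 28 2015 share
```

(Omitted) Do the same on the other two machines: rm -rf tmp/ logs/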
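Before deleting everything, the clusterID conflict can be confirmed directly. With hadoop.tmp.dir set as in core-site.xml and the default name/data directory settings, the NameNode records its ID in tmp/dfs/name/current/VERSION and each DataNode in tmp/dfs/data/current/VERSION, as a `clusterID=` line. A sketch that compares the two files; `read_cluster_id` and `compare_cluster_ids` are hypothetical helpers:

```bash
#!/bin/bash
# Hypothetical helper: print the clusterID value from a VERSION file
# (VERSION is a key=value file).
read_cluster_id() {
    sed -n 's/^clusterID=//p' "$1"
}

# Hypothetical helper: compare the NameNode's clusterID with a DataNode's.
# Returns 0 on match, 1 on mismatch, 2 if either ID cannot be read.
compare_cluster_ids() {
    local nn_version=$1 dn_version=$2 nn dn
    nn=$(read_cluster_id "$nn_version")
    dn=$(read_cluster_id "$dn_version")
    if [ -z "$nn" ] || [ -z "$dn" ]; then
        echo "clusterID not found" >&2
        return 2
    fi
    if [ "$nn" = "$dn" ]; then
        echo "match: $nn"
    else
        echo "MISMATCH: namenode=$nn datanode=$dn"
        return 1
    fi
}
```

On hadoop01: `compare_cluster_ids /export/servers/hadoop-2.7.1/tmp/dfs/name/current/VERSION /export/servers/hadoop-2.7.1/tmp/dfs/data/current/VERSION`. For hadoop02 and hadoop03, copy their data VERSION file over (e.g. with scp) and compare it the same way. A mismatch is usually also visible in the DataNode's log under logs/.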

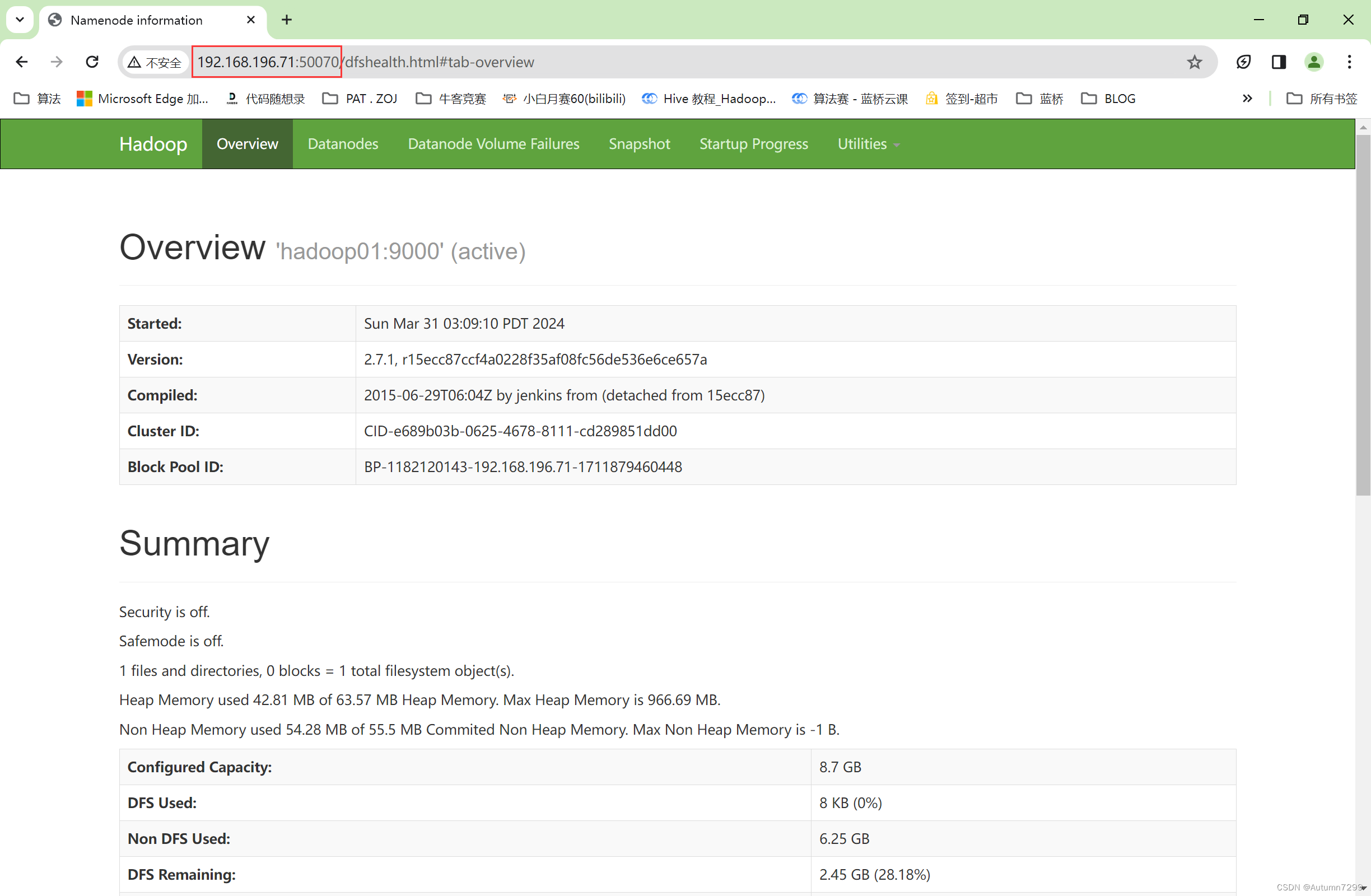

Starting YARN on hadoop02 (2nd attempt, OK: full HDFS + YARN startup)

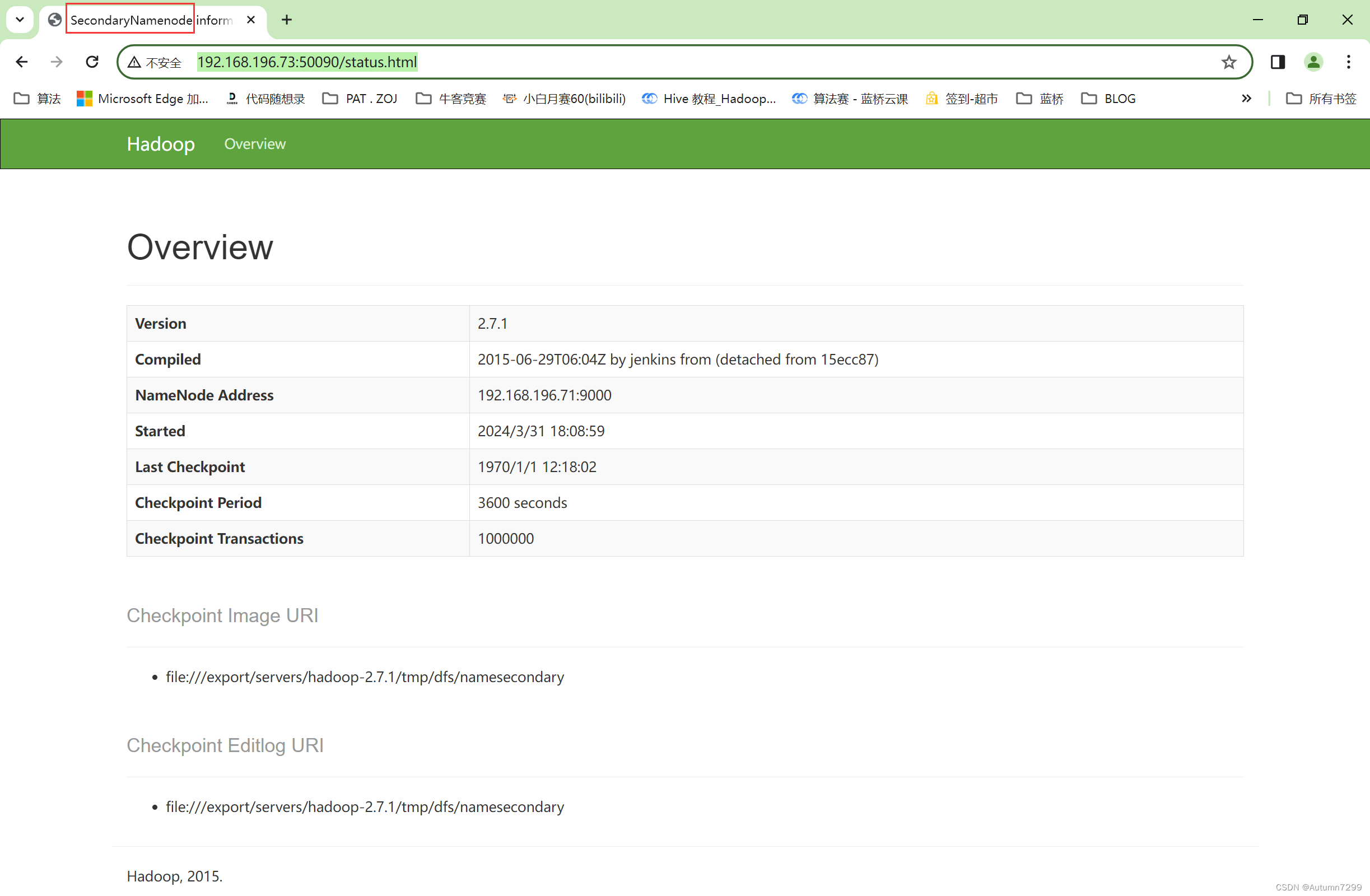

1. Format the NameNode

```
[root@hadoop01 hadoop-2.7.1]# hdfs namenode -format
(omitted...)
24/03/31 03:04:20 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1182120143-192.168.196.71-1711879460448
24/03/31 03:04:20 INFO common.Storage: Storage directory /export/servers/hadoop-2.7.1/tmp/dfs/name has been successfully formatted.
24/03/31 03:04:20 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
24/03/31 03:04:20 INFO util.ExitUtil: Exiting with status 0
24/03/31 03:04:20 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop01/192.168.196.71
************************************************************/
```

2. Check the processes

```
[root@hadoop01 hadoop-2.7.1]# jps
17391 Jps
[root@hadoop02 hadoop-2.7.1]# jps
16333 Jps
[root@hadoop03 hadoop-2.7.1]# jps
16181 Jps
```

3. Start HDFS on hadoop01

```
[root@hadoop01 hadoop-2.7.1]# start-dfs.sh
Starting namenodes on [hadoop01]
hadoop01: starting namenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-namenode-hadoop01.out
192.168.196.73: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop03.out
192.168.196.72: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop02.out
192.168.196.71: starting datanode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-datanode-hadoop01.out
Starting secondary namenodes [192.168.196.73]
192.168.196.73: starting secondarynamenode, logging to /export/servers/hadoop-2.7.1/logs/hadoop-root-secondarynamenode-hadoop03.out
```

4. Check the processes

```
[root@hadoop01 hadoop-2.7.1]# jps
18183 Jps
17833 DataNode
17727 NameNode
[root@hadoop02 hadoop-2.7.1]# jps
16705 Jps
16493 DataNode
[root@hadoop03 hadoop-2.7.1]# jps
16438 SecondaryNameNode
16360 DataNode
16638 Jps
```

5. Start YARN on hadoop02

```
[root@hadoop02 hadoop-2.7.1]# start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-resourcemanager-hadoop02.out
192.168.196.71: starting nodemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-nodemanager-hadoop01.out
192.168.196.73: starting nodemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-nodemanager-hadoop03.out
192.168.196.72: starting nodemanager, logging to /export/servers/hadoop-2.7.1/logs/yarn-root-nodemanager-hadoop02.out
```

6. Check the processes

```
[root@hadoop01 hadoop-2.7.1]# jps
18305 NodeManager
18373 Jps
17833 DataNode
17727 NameNode
[root@hadoop02 hadoop-2.7.1]# jps
16995 Jps
16917 NodeManager
16809 ResourceManager
16493 DataNode
[root@hadoop03 hadoop-2.7.1]# jps
16755 NodeManager
16438 SecondaryNameNode
16360 DataNode
18028 Jps
```
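As the first YARN attempt showed, a daemon can be missing from jps even though the start script reported starting it. A sketch of checking jps output against the daemons each node should run in this layout (hadoop01: NameNode, DataNode, NodeManager; hadoop02: ResourceManager, DataNode, NodeManager; hadoop03: SecondaryNameNode, DataNode, NodeManager); `check_daemons` is a hypothetical helper:

```bash
#!/bin/bash
# Hypothetical helper: read `jps` output on stdin and report every expected
# daemon that is not in it. Returns 1 if anything is missing.
check_daemons() {
    local running d missing=0
    # jps prints "<pid> <name>" per line; keep only the names
    running=$(awk '{print $2}')
    for d in "$@"; do
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "missing: $d"
            missing=1
        fi
    done
    return "$missing"
}
```

On hadoop01: `jps | check_daemons NameNode DataNode NodeManager`. When running it over ssh, calling jps by its full path (`/usr/java/jdk1.8.0_281-amd64/bin/jps`) is safer, since a non-interactive ssh session may not source /etc/profile.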

Web test

HDFS: NameNode, DataNode

```
[root@hadoop01 ~]# systemctl stop firewalld
```

NameNode web UI: http://192.168.196.71:50070/

```
[root@hadoop03 hadoop-2.7.1]# systemctl stop firewalld
```

SecondaryNameNode web UI: http://192.168.196.73:50090/

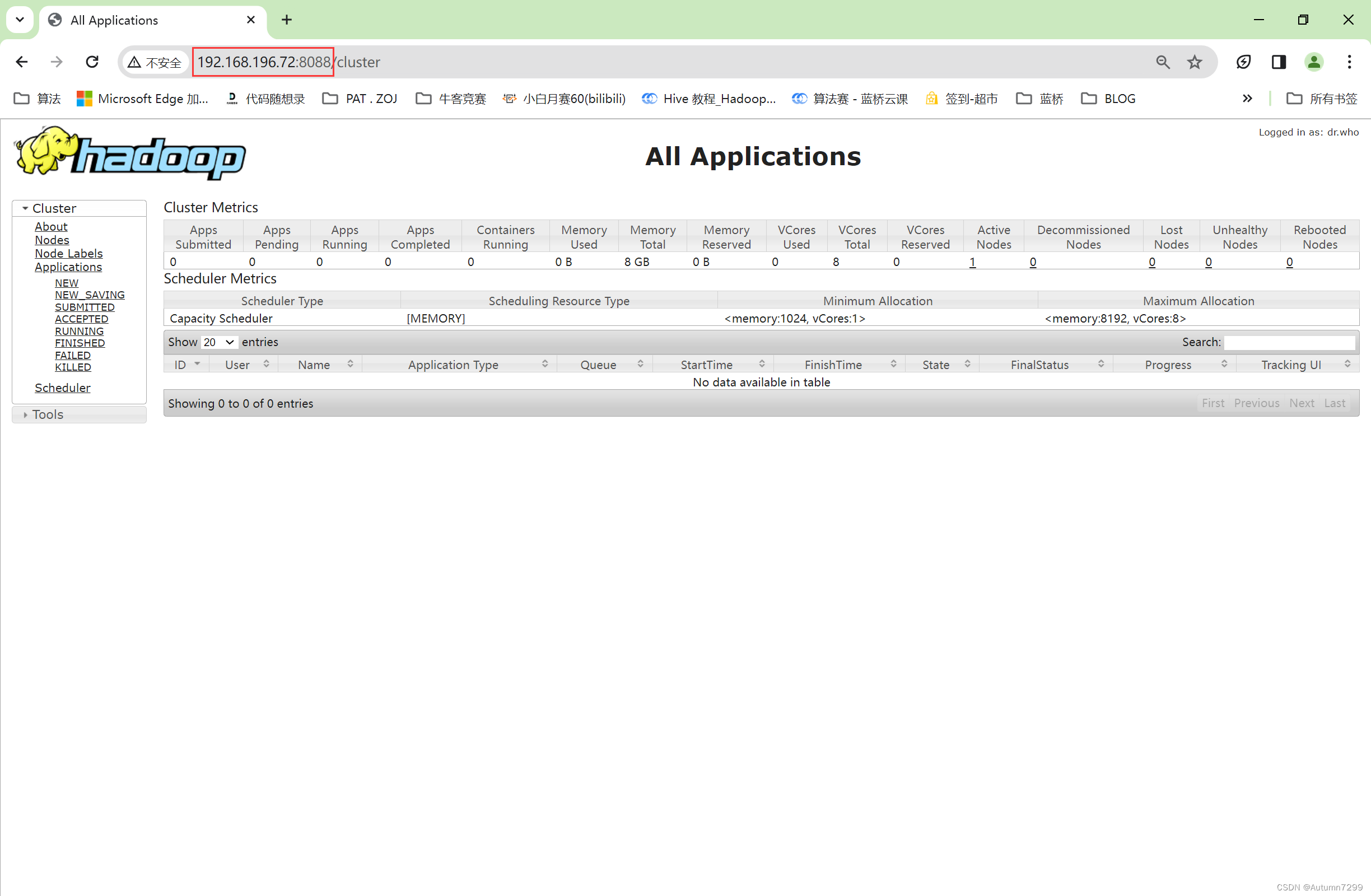

YARN: ResourceManager

```
[root@hadoop02 ~]# systemctl stop firewalld
```

ResourceManager web UI: http://192.168.196.72:8088

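Pitfalls you may run into (summary)

The cluster ID is generated when the NameNode is formatted. If something goes wrong and you need to redo the experiment, make sure the next `-format` does not produce a new ID that conflicts with the old one. Before formatting again:

First use jps to confirm that all processes are stopped, then delete the two directories with rm -rf tmp/ logs/ (under /export/servers/hadoop-2.7.1, on every node). tmp/ is hadoop.tmp.dir, which here holds the HDFS NameNode and DataNode directories (where the clusterID is recorded) as well as the NodeManager's local directories; logs/ holds the log files of all the daemons.

If a web page won't open, check whether the firewall is stopped on the host in that URL: systemctl stop firewalld

If jps shows no processes but a restart reports that a daemon is already running, leftover pid files for the cluster daemons remain in the system's /tmp directory (e.g. hadoop-root-namenode.pid, yarn-root-resourcemanager.pid). Delete the cluster-related ones and start the cluster again.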
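As far as I know, in Hadoop 2.x hadoop-daemon.sh and yarn-daemon.sh treat a daemon as running whenever the PID stored in its pid file belongs to a live process, and those files default to /tmp. If a daemon died and its PID was later reused by an unrelated process, jps shows nothing while the start script still refuses to start. A sketch that lists such leftover files; `find_stale_pids` is a hypothetical helper, it relies on Linux's /proc/<pid>/comm, and it treats any PID that is gone or no longer a `java` process as stale:

```bash
#!/bin/bash
# Hypothetical helper: list Hadoop/YARN pid files that no longer point at a
# running Java process. Assumes the default pid location (HADOOP_PID_DIR and
# YARN_PID_DIR unset, i.e. /tmp) and Linux's /proc filesystem.
find_stale_pids() {
    local dir=${1:-/tmp} f pid comm
    for f in "$dir"/hadoop-*.pid "$dir"/yarn-*.pid; do
        [ -f "$f" ] || continue               # the glob matched no file
        pid=$(cat "$f")
        # /proc/<pid>/comm holds the process's executable name (empty if gone)
        comm=$(cat "/proc/$pid/comm" 2>/dev/null)
        # Stale: the PID no longer exists, or now belongs to a non-Java process
        if [ "$comm" != "java" ]; then
            echo "$f"
        fi
    done
}
```

`find_stale_pids /tmp` only prints the files; review them before deleting. It cannot tell one Java process from another, so a PID reused by a different JVM is not flagged.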

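jps not working:

First check JAVA_HOME in the environment and verify with java -version; it should report the JDK you installed (1.8.0_281 here), not a preinstalled OpenJDK.

If the environment is fine, go to /usr/bin and run ln -s -f /usr/java/jdk1.8.0_281-amd64/bin/jps jps (adjust the path to your JDK).

jps command setup (jps: command not found)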

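If YARN and HDFS are not on the same machine, make sure you use the right IP for each web UI. The relevant settings are in:

HDFS 👉 core-site.xml

YARN 👉 yarn-site.xml

I also wanted to run a MapReduce word count, but there were quite a few pitfalls and this already took a long time; I'll add it later if I get the chance.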
